Representation Safety

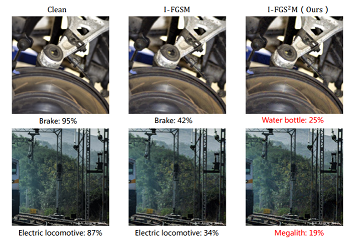

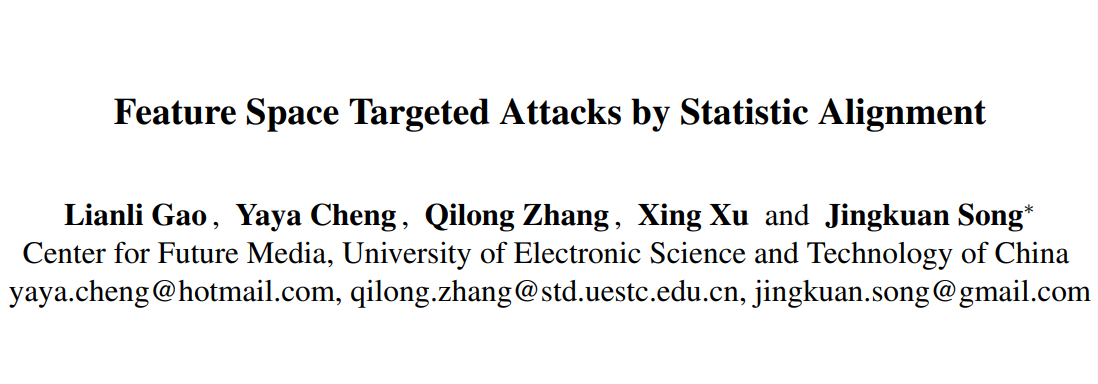

10. Practical No-box Adversarial Attacks with Training-free Hybrid Image Transformation

Qilong Zhang, Chaoning Zhang, Chaoqun Li, Jingkuan Song, Lianli Gao

CoRR 2022

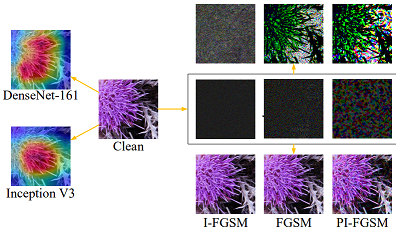

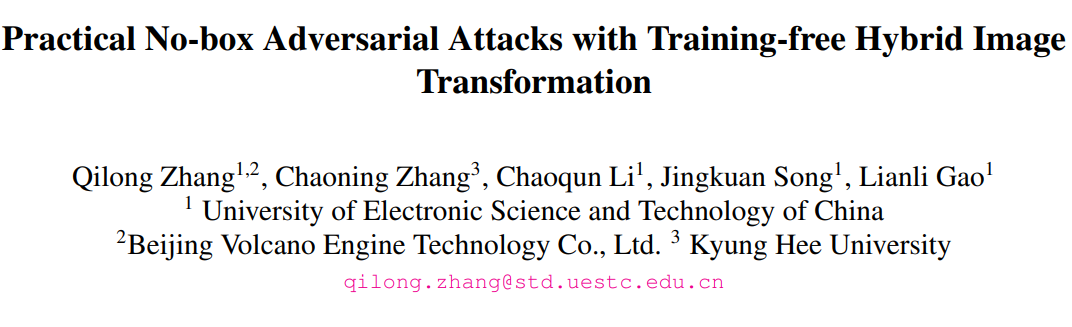

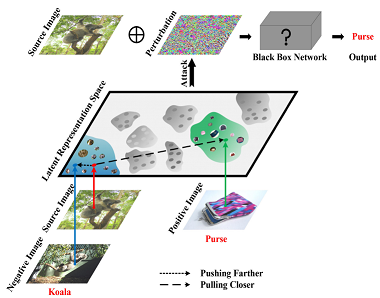

4. Push & Pull: Transferable Adversarial Examples With Attentive Attack

Lianli Gao, Zijie Huang, Jingkuan Song, Yang Yang, Heng Tao Shen

IEEE Trans. Multim. 2021

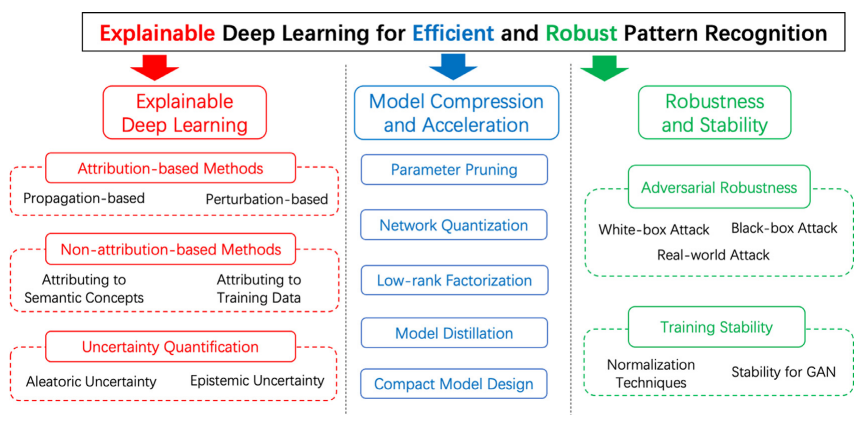

2. Explainable deep learning for efficient and robust pattern recognition: A survey of recent developments

Xiao Bai, Xiang Wang, Xianglong Liu, Qiang Liu, Jingkuan Song, Nicu Sebe, Been Kim

Pattern Recognit. 2021